Preprocessing

- Data Cleansing: Eliminating or correcting erroneous data by identifying and handling inconsistencies and outliers.

- Scaling and Normalizing: Numerical features often need to be scaled or normalized so that models can learn effectively. Scaling adjusts the range of a feature, while normalization maps it into a specific range, such as 0 to 1.

- Dimensionality Reduction: Reducing the number of features by creating a lower-dimensional representation of the data. This can help remove noise and improve both model performance and interpretability.

- Feature Construction: Creating new features from existing ones, for example by combining or transforming them.
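The difference between the two rescaling approaches mentioned above is easier to see in code. The following is a minimal sketch using only the Python standard library; the function names and the `ages` column are hypothetical, and standardization (zero mean, unit variance) is shown as one common form of scaling, which is an assumption about what "scaling" covers here.

```python
from statistics import mean, pstdev


def min_max_normalize(values, low=0.0, high=1.0):
    """Map values linearly so the smallest becomes `low` and the largest `high`."""
    v_min, v_max = min(values), max(values)
    if v_max == v_min:
        # A constant feature has no range to stretch; map every value to `low`.
        return [low for _ in values]
    scale = (high - low) / (v_max - v_min)
    return [low + (v - v_min) * scale for v in values]


def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        # A constant feature has zero spread; return zeros instead of dividing by 0.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


ages = [18, 30, 42, 66]  # hypothetical numerical feature column
normalized = min_max_normalize(ages)   # values now lie between 0 and 1
standardized = standardize(ages)       # values now have mean 0 and std 1
```

In practice the minimum, maximum, mean, and standard deviation would be computed on the training data only and then reused to transform validation and test data.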

Feature Engineering Techniques

Some commonly used techniques:

- Feature Scaling: Scaling numerical features ensures they are within a similar range, making it easier for models to learn from them.

- Bucketizing/Binning: Grouping numerical data into buckets or bins can convert continuous values into discrete categories. This technique is useful when encoding numerical data as categories, enabling models to learn patterns based on these groups.

- Dimensionality Reduction: Techniques like Principal Component Analysis (PCA), t-SNE, and UMAP help reduce the number of features while preserving important information.

- Feature Crosses: Combining multiple features to create new ones. This technique can encode non-linear relationships or express the same information in fewer features.

- Encoding Features: Transforming categorical variables into numerical representations. Techniques like one-hot encoding and categorical embeddings convert categorical data into a format suitable for models.
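Binning and one-hot encoding from the list above can be sketched in a few lines. This is an illustrative example using only the standard library; the function names, the age boundaries, and the color vocabulary are all hypothetical choices, not taken from any particular library.

```python
from bisect import bisect_right


def bucketize(value, boundaries):
    """Return the index of the bucket that `value` falls into.

    `boundaries` must be sorted in ascending order. Bucket 0 holds values below
    boundaries[0]; bucket i holds values in [boundaries[i-1], boundaries[i]);
    the last bucket holds values at or above the final boundary.
    """
    return bisect_right(boundaries, value)


def one_hot(category, vocabulary):
    """Encode `category` as a 0/1 list with one slot per vocabulary entry.

    A category missing from the vocabulary produces an all-zero list.
    """
    return [1 if category == item else 0 for item in vocabulary]


age_boundaries = [18, 35, 65]       # hypothetical cut points, giving 4 buckets
colors = ["red", "green", "blue"]   # hypothetical categorical vocabulary

age_bucket = bucketize(42, age_boundaries)  # 42 lies in [35, 65)
age_vector = one_hot(age_bucket, range(len(age_boundaries) + 1))
color_vector = one_hot("green", colors)
```

Chaining the two steps, as done for `age_vector`, is how a continuous value ends up represented as a category that a model can treat like any other one-hot feature.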

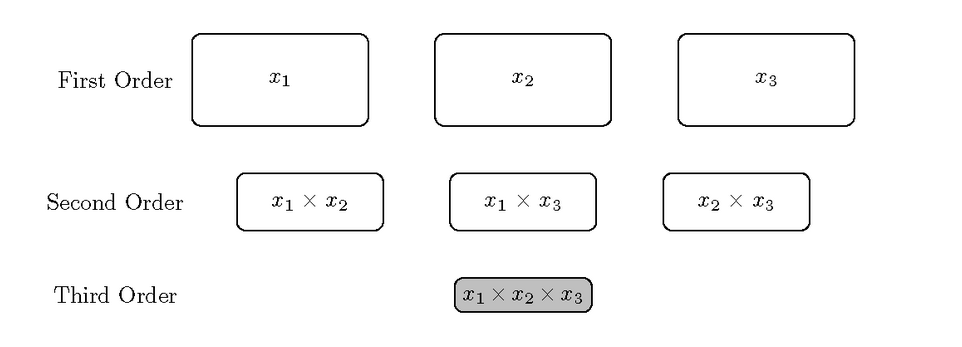

Feature Crosses

Guiding Principles:

- Expert-selected interactions should be the first to be explored.

- The interaction hierarchy principle states that the higher the degree of an interaction, the less likely it is to explain variation in the response.

source: https://bookdown.org/max/FES/interactions-guiding-principles.html

The three-way interaction term is greyed out due to the effect sparsity principle.

- The effect sparsity principle contends that only a fraction of the possible effects truly explain a significant amount of response variation.

- The heredity principle is modeled on genetic heredity and asserts that an interaction term may only be considered if the lower-order terms preceding it are effective at explaining response variation.
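The hierarchy and heredity principles together suggest a simple way to prune the search for interactions. The sketch below is one strict reading of the heredity principle, assuming an interaction is a candidate only when every lower-order term built from its features has already been judged effective. The function name, the `effective` set, and the feature names `x1` to `x3` are hypothetical.

```python
from itertools import combinations


def candidate_interactions(effective_terms, degree=2):
    """List interactions of `degree` whose lower-order terms are all effective.

    `effective_terms` is a set of frozensets. Each frozenset names the features
    of a term already judged effective (size 1 = main effect, size 2 = pairwise
    interaction, and so on).
    """
    features = sorted({f for term in effective_terms for f in term})
    candidates = []
    for combo in combinations(features, degree):
        # Every non-empty proper subset of the combination is a "parent" term.
        parents = (
            frozenset(subset)
            for size in range(1, degree)
            for subset in combinations(combo, size)
        )
        if all(parent in effective_terms for parent in parents):
            candidates.append(combo)
    return candidates


effective = {
    frozenset({"x1"}),
    frozenset({"x2"}),
    frozenset({"x3"}),
    frozenset({"x1", "x2"}),  # only one pairwise interaction was effective
}
pairs = candidate_interactions(effective, degree=2)
triples = candidate_interactions(effective, degree=3)
```

Here all three pairs qualify because every main effect is effective, but the three-way term is ruled out because two of its pairwise parents are not. Working upward by degree in this way also follows the interaction hierarchy principle, since low-degree terms are examined first.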

Key points:

- Feature crosses combine multiple features into a new feature that captures the relationship between them.

- They can be used to encode non-linear patterns in the feature space, allowing models to capture more complex interactions.

- Feature crosses can also reduce the number of features by encoding the same information in a single feature.

- When the crossed features genuinely interact, adding the cross can improve a model's predictive performance.

Example:

- Area = Width × Height

- Hour of week = combination of “hour of day” and “day of week”
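Both examples can be written as small functions. This is a minimal sketch: the encoding `day * 24 + hour` and the 0-based day numbering are assumptions chosen for illustration, and the function names are hypothetical.

```python
def area(width, height):
    """Cross two numerical features by multiplying them."""
    return width * height


def hour_of_week(day_of_week, hour_of_day):
    """Cross day (0-6) and hour (0-23) into a single index in 0..167."""
    if not (0 <= day_of_week < 7 and 0 <= hour_of_day < 24):
        raise ValueError("day_of_week must be 0-6 and hour_of_day must be 0-23")
    return day_of_week * 24 + hour_of_day


def split_hour_of_week(index):
    """Recover (day_of_week, hour_of_day) from the crossed index."""
    return divmod(index, 24)


room_area = area(3, 4)
slot = hour_of_week(2, 9)          # day 2, 09:00
day, hour = split_hour_of_week(slot)
```

Because the crossed index can be split back into its parts, the single "hour of week" feature carries the same information as the two original features, which is the sense in which a cross can reduce the feature count.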