Goals

- Some models (such as SVMs and neural networks) are sensitive to irrelevant features/predictors. Superfluous features can degrade predictive performance in some situations.

- Some models (such as logistic regression) are vulnerable to correlated features.

- Removing features can reduce cost, and it makes scientific sense to include the minimum possible set that provides acceptable results.

Classes of Feature Selection Methods

In general, feature selection methods can be divided into three classes.

Info

title: Intrinsic feature selection is naturally incorporated into the modeling process.

Examples:

- Tree and rule-based models. These search for the best predictor and split point such that the outcomes are more homogeneous within each new partition. A predictor that is never used in a split is effectively excluded from the model.

- Multivariate adaptive regression splines (MARS)

- Regularization models. These penalize or shrink the predictor coefficients used by the model (in some cases, such as with L1 regularization, to exactly zero).

Cons: model dependent
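To illustrate how L1 regularization can shrink a coefficient to exactly zero, here is a minimal sketch. It assumes the special case of an orthonormal design matrix, where the lasso estimate for each coefficient is the soft-thresholded least-squares estimate. The function name `soft_threshold`, the penalty `lam`, and the coefficient values are hypothetical and chosen only for illustration.

```python
def soft_threshold(beta_ols: float, lam: float) -> float:
    """Lasso solution for one coefficient under an orthonormal design.

    Minimizes 0.5 * (beta_ols - b)**2 + lam * abs(b) over b.
    """
    if beta_ols > lam:
        return beta_ols - lam
    if beta_ols < -lam:
        return beta_ols + lam
    return 0.0  # coefficients with |beta_ols| <= lam are dropped entirely


# Hypothetical least-squares coefficients for four predictors.
ols_coefs = [2.0, -1.5, 0.3, -0.1]
lasso_coefs = [soft_threshold(b, lam=0.5) for b in ols_coefs]

# Predictors whose coefficient survived the penalty are the "selected" ones.
selected = [j for j, b in enumerate(lasso_coefs) if b != 0.0]
```

Large coefficients are only shrunk, while the two small ones become exactly zero, so the model itself performs the selection as part of fitting.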

Info

title: Filter methods conduct an initial supervised analysis of the features/predictors to determine which are important, and provide only these to the model.

Filter methods are a greedy feature selection approach.

Pros: Simple and fast, effective at capturing the large trends (i.e., individual predictor-outcome relationships)

Cons:

- prone to over-selecting predictors

- in many cases, some measure of statistical significance is used to judge "importance", which may be disconnected from actual predictive performance
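A filter can be sketched as scoring each predictor on its own against the outcome and keeping only the top scorers. The example below is one possible instance, assuming absolute Pearson correlation as the importance score and a simulated data set. The helper names (`pearson`, `filter_top_k`), the cutoff `k`, and the data-generating coefficients are hypothetical.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def filter_top_k(columns, y, k):
    """Score each predictor individually and keep the k highest |r|."""
    scores = [abs(pearson(col, y)) for col in columns]
    ranked = sorted(range(len(columns)), key=lambda j: scores[j], reverse=True)
    return sorted(ranked[:k]), scores


rng = random.Random(0)
n = 200
# Assumed setup: columns 0 and 1 drive the outcome; columns 2-4 are pure noise.
columns = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(5)]
y = [2 * columns[0][i] - 3 * columns[1][i] + rng.gauss(0, 0.5) for i in range(n)]

kept, scores = filter_top_k(columns, y, k=2)
```

Each predictor is scored in isolation and the model is never consulted, which is why a filter is fast but can disagree with what actually helps predictive performance.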

Info

title: Wrapper methods use an iterative search procedure that repeatedly supplies predictor subsets to the model, then uses the resulting model performance to guide which subset to evaluate next.

Wrapper methods can be either greedy or global (non-greedy) search approaches.

Pros:

They have the most potential to find the globally best predictor subset, if one exists (especially the non-greedy ones).

Cons:

Computationally costly
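The wrapper idea can be sketched as a greedy forward search: fit the model on each candidate subset, score it on held-out data, and keep the predictor that improves the score most. The example below is only an illustration under stated assumptions. The model (k-nearest-neighbour regression), the hold-out split, the stopping rule, and all names (`knn_rmse`, `forward_select`, `make_row`) are hypothetical choices, not something the notes prescribe.

```python
import math
import random


def knn_rmse(train, valid, subset, k=5):
    """Validation RMSE of k-nearest-neighbour regression using only `subset`."""
    sq_err = 0.0
    for xv, yv in valid:
        nearest = sorted(
            train,
            key=lambda row: sum((row[0][j] - xv[j]) ** 2 for j in subset),
        )[:k]
        pred = sum(y for _, y in nearest) / k
        sq_err += (pred - yv) ** 2
    return math.sqrt(sq_err / len(valid))


def forward_select(train, valid, n_features):
    """Greedy wrapper: add the predictor that most lowers validation RMSE."""
    selected, best = [], float("inf")
    while len(selected) < n_features:
        candidates = [j for j in range(n_features) if j not in selected]
        scored = [(knn_rmse(train, valid, selected + [j]), j) for j in candidates]
        score, j = min(scored)
        if score >= best:  # no candidate improves the model: stop searching
            break
        selected.append(j)
        best = score
    return selected, best


rng = random.Random(1)


def make_row():
    # Assumed setup: predictors 0 and 1 matter; predictors 2-5 are noise.
    x = [rng.gauss(0, 1) for _ in range(6)]
    return x, 3 * x[0] + 2 * x[1] + rng.gauss(0, 0.1)


train = [make_row() for _ in range(150)]
valid = [make_row() for _ in range(75)]
selected, rmse = forward_select(train, valid, n_features=6)
```

Every step refits and rescores the model once per remaining candidate, which makes the computational cost of wrappers concrete. A non-greedy search would evaluate even more subsets.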

Recommended Approach

Tip

Start with one or more intrinsic methods and see what they yield.

- If a non-linear intrinsic method performs well, proceed with a wrapper method using a non-linear model.

- Similarly, if a linear intrinsic method performs well, proceed with a wrapper method using a linear model.

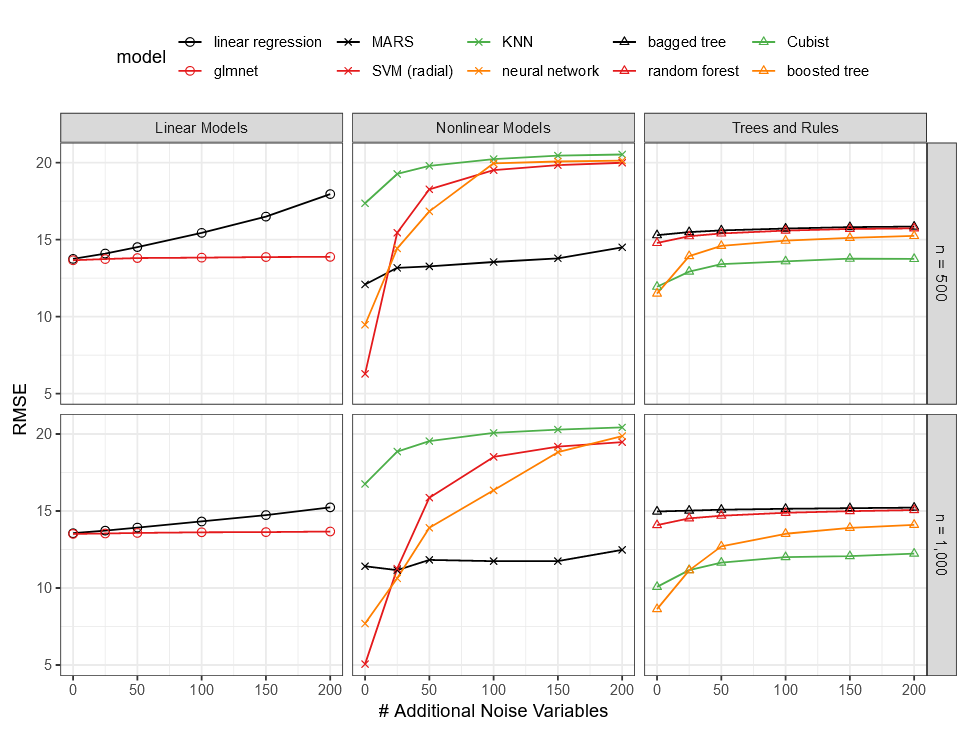

Effect of Irrelevant Features

How strongly irrelevant features affect a model depends on the type of model.

Below is an example of additional irrelevant (noise) features versus RMSE (the x-axis is the number of additional noise features).

image source: https://bookdown.org/max/FES/feature-selection-simulation.html

The original y is a nonlinear function of 20 predictors:

$$y = x_1 + \sin(x_2) + \log(|x_3|) + x_4^2 + x_5 x_6 + I(x_7 x_8 x_9 < 0) + I(x_{10} > 0) + x_{11} I(x_{11} > 0) + \sqrt{|x_{12}|} + \cos(x_{13}) + 2x_{14} + |x_{15}| + I(x_{16} < -1) + x_{17} I(x_{17} < -1) - 2x_{18} - x_{19} x_{20} + \epsilon$$
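The formula translates directly into code. The sketch below evaluates it for one row and optionally appends extra noise predictors that do not enter the formula. The function names (`indicator`, `simulate_y`, `make_row`) are hypothetical, and drawing independent standard-normal predictors and errors is an assumption made here for illustration; the linked source may use a different sampling scheme.

```python
import math
import random


def indicator(condition: bool) -> float:
    """I(.) in the formula: 1 when the condition holds, else 0."""
    return 1.0 if condition else 0.0


def simulate_y(x, eps=0.0):
    """Outcome for one row; x[0] is x_1, ..., x[19] is x_20."""
    (x1, x2, x3, x4, x5, x6, x7, x8, x9, x10,
     x11, x12, x13, x14, x15, x16, x17, x18, x19, x20) = x[:20]
    return (
        x1 + math.sin(x2) + math.log(abs(x3)) + x4 ** 2 + x5 * x6
        + indicator(x7 * x8 * x9 < 0) + indicator(x10 > 0)
        + x11 * indicator(x11 > 0) + math.sqrt(abs(x12)) + math.cos(x13)
        + 2 * x14 + abs(x15) + indicator(x16 < -1)
        + x17 * indicator(x17 < -1) - 2 * x18 - x19 * x20 + eps
    )


def make_row(rng, n_noise=0):
    """20 relevant predictors plus `n_noise` irrelevant ones.

    Assumption: independent standard-normal predictors and errors.
    """
    x = [rng.gauss(0, 1) for _ in range(20 + n_noise)]
    return x, simulate_y(x, eps=rng.gauss(0, 1))


# Example: one row with 5 additional noise predictors.
x, y = make_row(random.Random(0), n_noise=5)
```

Only the first 20 entries of `x` influence `y`, so any model fitted on all columns has to cope with the extra ones on its own.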
