Infrastructure for Model Serving

two main options for infrastructure:

-

On-Premises: In this approach, you have all the hardware and software required for running your models on your own premises.

- pros: provides you with complete control and flexibility to adapt to changes quickly.

- cons: it can be complicated and expensive to procure, install, configure, and maintain the hardware infrastructure.

-

Cloud Provider: Alternatively, you can outsource your infrastructure needs to a cloud provider like Amazon Web Services, Google Cloud Platform, or Microsoft Azure.

- pros: Cloud providers manage the hardware infrastructure for you, offering services such as pipeline management.

- cons: sometimes can be pricier.

Model Servers

Model servers are responsible for instantiating machine learning models and exposing the desired methods to clients. Some popular model servers include:

- TensorFlow serving: Allows serving TensorFlow models and provides an API for clients to make predictions.

- Torch Serve: Serves PyTorch models and offers an interface for making predictions.

- Kubeflow Serving: Specifically designed for serving models in a Kubeflow environment.

- NVIDIA Triton Inference Server: A server optimized for NVIDIA GPU-accelerated models.

Optimization

Strategies for Optimization

There are several areas where optimization can be applied:

-

Infrastructure: Scale the infrastructure by adding more powerful hardware or using containerized and virtualized environments. This allows for efficient resource allocation and scaling based on demand.

- Vertical Scaling: It involves using more powerful hardware resources by upgrading CPUs, adding more RAM, or using newer GPUs.

- Horizontal Scaling: It involves adding more devices as the workload increases.

-

Model Architecture: Understand the trade-off between inference speed and accuracy. If a slightly less accurate model significantly improves latency, it may be worth considering. Consider model resource optimization

-

Model Compilation: If the target hardware is known, perform model compilation to create a model artifact and execution runtime optimized for that hardware. This can reduce memory consumption and latency.

-

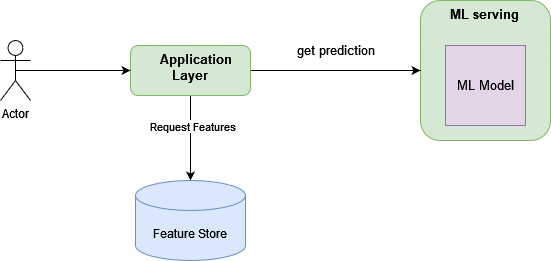

Application Layer: Optimize the application layer by caching frequently accessed data (feature store) or precomputing certain predictions. For example, popular product recommendations can be stored in faster data storage (key-value store) to minimize database hits.